Developer's Guide to Modularized Indexes

eXist-db provides a modular mechanism to index data. This eases index development and the development of more-or-less related custom functions (in Java).

Introduction

eXist ships with four index types based on this mechanism:

- Lucene Range index

An index providing range- and field-based searches, built with Apache Lucene.

- Lucene Full-text index

A full-text search index which uses Apache Lucene for indexing and querying.

- NGram index

An NGram index stores the N-grams contained in the data's characters. For instance, if the index is configured to index 3-grams, <data>abcde</data> will generate these index entries:

  - abc
  - bcd
  - cde
  - de␣
  - e␣␣

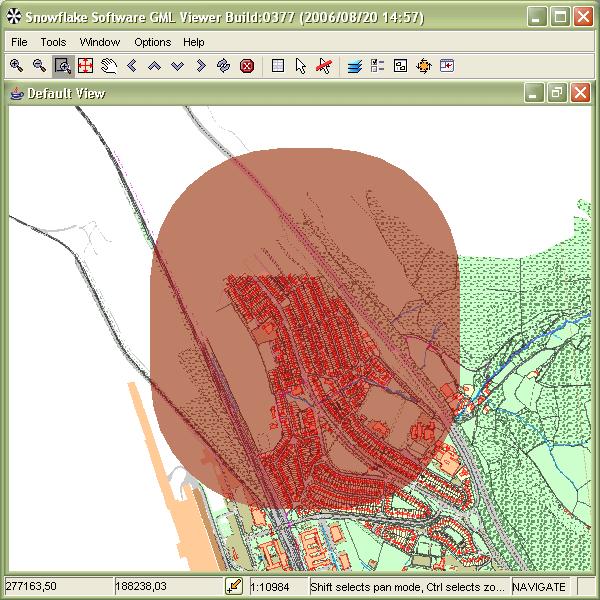

- Spatial index

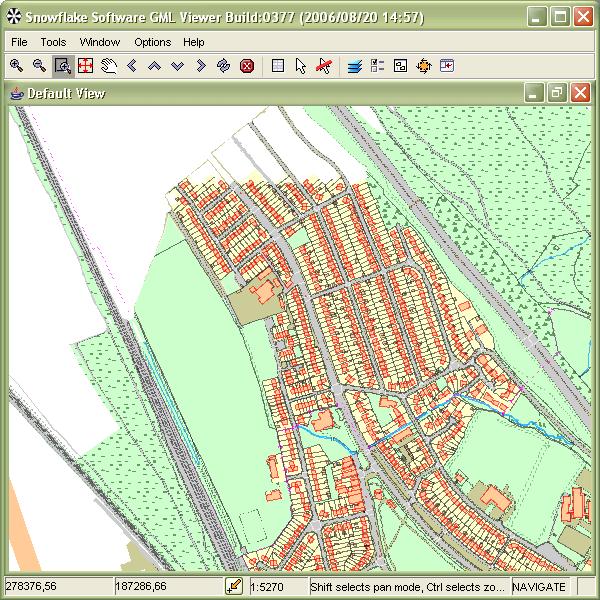

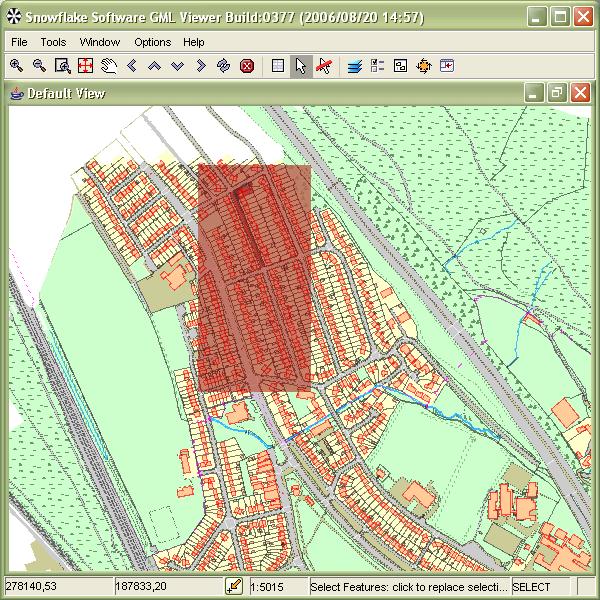

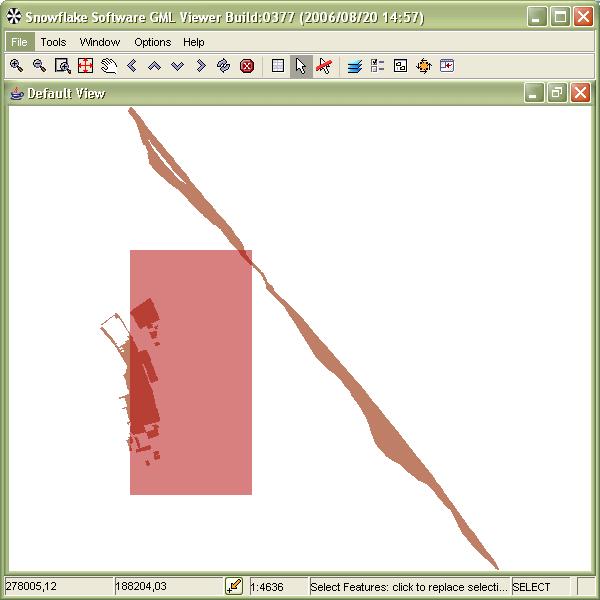

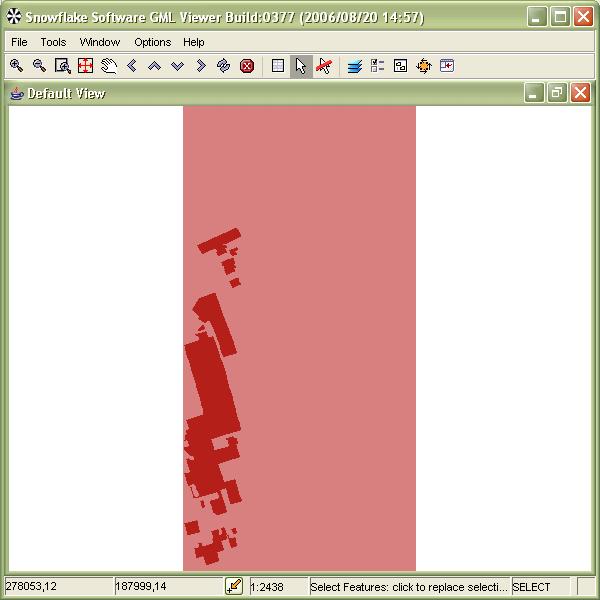

A spatial index that stores some of the geometric characteristics of Geography Markup Language geometries (tested against GML version 2.1.2). For instance:

<gml:Polygon xmlns:gml="http://www.opengis.net/gml" srsName="osgb:BNG">
    <gml:outerBoundaryIs>
        <gml:LinearRing>
            <gml:coordinates>
                278515.400,187060.450 278515.150,187057.950 278516.350,187057.150 278546.700,187054.000 278580.550,187050.900 278609.500,187048.100 278609.750,187051.250 278574.750,187054.650 278544.950,187057.450 278515.400,187060.450
            </gml:coordinates>
        </gml:LinearRing>
    </gml:outerBoundaryIs>
</gml:Polygon>

This will generate several index entries. The most important ones are:

  - The spatial referencing system (osgb:BNG for this polygon)
  - The polygon itself, stored in a binary form (Well-Known Binary)
  - The coordinates of its bounding box
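To make the bounding-box entry concrete, the envelope of the polygon shown above can be derived from the text of its gml:coordinates element. The following is a minimal sketch in plain Java, not the code used by the spatial index itself; the class BoundingBoxSketch and its envelope() method are hypothetical names introduced only for this illustration.

```java
import java.util.Arrays;

/** Hypothetical helper, for illustration only. */
final class BoundingBoxSketch {

    /** Returns {minX, minY, maxX, maxY} for whitespace-separated "x,y" tuples. */
    static double[] envelope(String coordinates) {
        double minX = Double.POSITIVE_INFINITY, minY = Double.POSITIVE_INFINITY;
        double maxX = Double.NEGATIVE_INFINITY, maxY = Double.NEGATIVE_INFINITY;
        for (String tuple : coordinates.trim().split("\\s+")) {
            String[] xy = tuple.split(",");
            double x = Double.parseDouble(xy[0]);
            double y = Double.parseDouble(xy[1]);
            minX = Math.min(minX, x);
            minY = Math.min(minY, y);
            maxX = Math.max(maxX, x);
            maxY = Math.max(maxY, y);
        }
        return new double[] {minX, minY, maxX, maxY};
    }

    public static void main(String[] args) {
        // Coordinates of the sample polygon
        String coordinates = "278515.400,187060.450 278515.150,187057.950 "
                + "278516.350,187057.150 278546.700,187054.000 "
                + "278580.550,187050.900 278609.500,187048.100 "
                + "278609.750,187051.250 278574.750,187054.650 "
                + "278544.950,187057.450 278515.400,187060.450";
        System.out.println(Arrays.toString(envelope(coordinates)));
    }
}
```

Storing such an envelope next to the full geometry is what lets a query discard most candidates with cheap numeric comparisons before any exact geometric test is run.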

Classes

Below is a technical explanation of the Java classes that let you interact with eXist's indexing features.

org.exist.indexing.IndexManager

The indexing architecture introduces a package, org.exist.indexing, responsible for index management. The IndexManager is created by org.exist.storage.BrokerPool, which allocates org.exist.storage.DBBrokers to each process accessing each DB instance. Each time a DB instance is created (most installations have only one, most often called exist), the initialize() method constructs an IndexManager that will be available through the getIndexManager() method of org.exist.storage.BrokerPool.

public IndexManager(BrokerPool pool, Configuration config)

This constructor keeps track of the BrokerPool that has created the instance and receives the database's configuration object, usually defined in an XML file called conf.xml. This new entry is expected in the configuration file:

<modules>
    <module id="ngram-index" class="org.exist.indexing.ngram.NGramIndex" file="ngram.dbx" n="3"/>
    <module id="spatial-index" class="org.exist.indexing.spatial.GMLHSQLIndex" connectionTimeout="100000" flushAfter="300"/>
</modules>

This defines two indexes, backed by their specific classes (class attribute; these classes implement the org.exist.indexing.Index interface, as will be seen below), optionally assigns them a human-readable (and writable) identifier, and passes them custom parameters which are implementation-dependent. The constructor then configures each index (by calling its configure() method), opens it (by calling its open() method) and keeps track of it.
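The instantiate, configure, open and register sequence described above can be sketched as follows. This is a simplified illustration under stated assumptions, not eXist's code: SketchIndex is a hypothetical, heavily reduced stand-in for org.exist.indexing.Index, and IndexRegistrySketch, DemoIndex and register() are invented names.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class IndexRegistrySketch {

    /** Hypothetical, reduced stand-in for org.exist.indexing.Index. */
    interface SketchIndex {
        String getIndexId();
        void configure(String name, Map<String, String> attributes);
        void open();
        boolean isOpen();
    }

    /** Example implementation; its class name doubles as its class identifier. */
    public static final class DemoIndex implements SketchIndex {
        private boolean open;
        public DemoIndex() { }
        public String getIndexId() { return DemoIndex.class.getName(); }
        public void configure(String name, Map<String, String> attributes) { }
        public void open() { open = true; }
        public boolean isOpen() { return open; }
    }

    private final Map<String, SketchIndex> byId = new LinkedHashMap<>();
    private final Map<String, SketchIndex> byName = new LinkedHashMap<>();

    /** Mirrors one module entry: instantiate the class, configure it, open it, keep track of it. */
    void register(String name, String className, Map<String, String> attributes) {
        SketchIndex index;
        try {
            index = (SketchIndex) Class.forName(className)
                    .getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + className, e);
        }
        index.configure(name, attributes);
        index.open();
        byId.put(index.getIndexId(), index);   // lookup by class identifier
        byName.put(name, index);               // lookup by human-defined name
    }

    SketchIndex getIndexById(String id) { return byId.get(id); }

    SketchIndex getIndexByName(String name) { return byName.get(name); }

    public static void main(String[] args) {
        IndexRegistrySketch registry = new IndexRegistrySketch();
        registry.register("demo-index", DemoIndex.class.getName(), Map.of("n", "3"));
        System.out.println(registry.getIndexByName("demo-index").isOpen());
    }
}
```

Keeping two maps reflects the two lookup styles the manager offers: code written against an index implementation uses the class identifier, while the human-defined name stays free to change in the configuration file.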

org.exist.indexing.IndexManager also provides these public

methods:

public BrokerPool getBrokerPool()

This returns the org.exist.storage.BrokerPool for which this

IndexManager was created.

public synchronized Index getIndexById(String indexId)

A method that returns an Index given its class identifier (see

below). Allows custom functions to access Indexes whatever their

human-defined name is. This is probably the only method in this class that will be

really needed by a developer.

public synchronized Index getIndexByName(String indexName)

The counterpart of the previous method. Pass the human-readable name of the

Index as defined in the configuration.

public void shutdown()

This method is called when eXist shuts down. close() will be

called for every registered Index. That allows them to free the

resources they have allocated.

public void removeIndexes()

This method is called when repair() is called from

org.exist.storage.NativeBroker.

repair() reconstructs every index (including the

structural one) from what is contained in the persistent DOM (usually

dom.dbx).

remove() will be called for every registered Index. That allows each index to destroy its persistent storage if it wants to do so, which is usually appropriate given that repair() is called when the DB and/or its indexes are corrupted.

public void reopenIndexes()

This method is called when repair() is called from

org.exist.storage.NativeBroker.

open() will be called for every registered

Index. That allows each index to (re)allocate the resources it

needs for its persistent storage.

org.exist.indexing.IndexController

Another important class is org.exist.indexing.IndexController

which, as its name suggests, controls the way data to be indexed are dispatched to

the registered indexes, using org.exist.indexing.IndexWorkers

that will be described below. Each org.exist.storage.DBBroker

constructs such an IndexController when it is itself constructed,

using this constructor:

public IndexController(DBBroker broker)

This registers the broker's IndexWorkers, one for each registered Index. These IndexWorkers, which will be described below, are returned by the getWorker() method in org.exist.indexing.Index, which is usually a good place to create such an IndexWorker, at least the first time it is called.

This IndexController will be available through the

getIndexController() method of

org.exist.storage.DBBroker.

Here are the other public methods:

public Map configure(NodeList configNodes, Map namespaces)

This method receives the database's configuration object, usually defined in an XML file called conf.xml. Both the configuration nodes and the namespaces (remember that some configuration settings, e.g. paths, need namespaces to be defined) will be passed to the configure() method of each IndexWorker. The returned object is a java.util.Map that will be available from collection.getIndexConfiguration(broker).getCustomIndexSpec(INDEX_CLASS_IDENTIFIER).

public IndexWorker getWorkerByIndexId(String indexId)

A method that returns an IndexWorker given the class identifier of its associated Index. Very useful to the developer since it allows custom functions to access IndexWorkers whatever the human-defined name of their Index is. This is probably the only method in this class that a developer will really need.

public IndexWorker getWorkerByIndexName(String indexName)

The counterpart of the previous method. For the human-readable name of the

Index as defined in the configuration.

public void setDocument(DocumentImpl doc)

This method sets the org.exist.dom.DocumentImpl on which the

IndexWorkers shall work. Calls

setDocument(doc) on each registered

IndexWorker.

public void setMode(int mode)

This method sets the operating mode in which the IndexWorkers

shall work. See below for further details on operating modes. Calls

setMode(mode) on each registered

IndexWorker.

public void setDocument(DocumentImpl doc, int mode)

A convenience method that sets both the

org.exist.dom.DocumentImpl and the operating mode. Calls

setDocument(doc, mode) on each registered

IndexWorker.

public DocumentImpl getDocument()

Returns the org.exist.dom.DocumentImpl on which the

IndexWorkers will have to work.

public int getMode()

Returns the operating mode in which the IndexWorkers will have

to work.

public void flush()

Called in various places when pending operations (data insertion, update or removal) have to be completed. Calls flush() on each registered IndexWorker.

public void removeCollection(Collection collection, DBBroker broker)

Called when a collection is to be removed. This allows the index entries for this collection to be deleted in a single operation. Calls removeCollection() on each registered IndexWorker.

public void reindex(Txn transaction, StoredNode reindexRoot, int mode)

Called when a document is to be reindexed. Only the reindexRoot node and its descendants will have their index entries updated or removed, depending on the mode parameter.

public StoredNode getReindexRoot(StoredNode node, NodePath path)

Determines the node which should be reindexed together with its descendants. Calls

getReindexRoot() on each registered

IndexWorker. The top-most node will be the actual node from which

the DBBroker will start reindexing.

public StoredNode getReindexRoot(StoredNode node, NodePath path, boolean includeSelf)

Same as above, with more parameters.
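The selection of the top-most reindex root can be pictured with the sketch below. It is only an illustration under assumptions: nodes are represented by hypothetical dotted level identifiers such as "1.3.2", each worker is modelled as a function from the modified node to its preferred root (or null when it has no preference), and ReindexRootSketch is an invented class. Because every candidate is an ancestor-or-self of the same node, the shallowest candidate is the top-most one.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.UnaryOperator;

final class ReindexRootSketch {

    /** Number of levels in a dotted identifier; "1.3.2" has 3 (hypothetical id scheme). */
    static int depth(String nodeId) {
        return nodeId.split("\\.").length;
    }

    /** Asks every worker for a candidate root and keeps the shallowest one. */
    static String topMostRoot(String node, List<UnaryOperator<String>> workers) {
        String top = null;
        for (UnaryOperator<String> worker : workers) {
            String candidate = worker.apply(node);   // null: this worker has no preference
            if (candidate != null && (top == null || depth(candidate) < depth(top))) {
                top = candidate;
            }
        }
        return top;
    }

    public static void main(String[] args) {
        UnaryOperator<String> wantsAncestor = node -> "1.3";   // e.g. an index defined on an ancestor
        UnaryOperator<String> noPreference = node -> null;
        UnaryOperator<String> wantsSelf = node -> node;
        List<UnaryOperator<String>> workers =
                Arrays.asList(wantsAncestor, noPreference, wantsSelf);
        System.out.println(topMostRoot("1.3.2.4", workers));
    }
}
```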

public StreamListener getStreamListener()

Returns the first org.exist.indexing.StreamListener in the StreamListeners pipeline. There is at most one StreamListener per IndexWorker that will intercept the stream of (re)indexed nodes. IndexWorkers that are not interested in the data (depending on e.g. the document and/or the operating mode) may return null through their getListener() method and thus not participate in the (re)indexing process. In other words, they will not listen to the indexed nodes.
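How such a pipeline might be assembled is sketched below: listeners are linked in registration order and workers that return null are simply skipped. This is an assumption-laden illustration, not the IndexController source; Listener and Worker are hypothetical, reduced stand-ins for StreamListener and IndexWorker, and buildChain() is an invented name.

```java
import java.util.Arrays;
import java.util.List;

final class ListenerChainSketch {

    /** Hypothetical, reduced stand-in for org.exist.indexing.StreamListener. */
    static final class Listener {
        final String name;
        Listener next;
        Listener(String name) { this.name = name; }
    }

    /** Hypothetical stand-in for IndexWorker: getListener() may return null. */
    interface Worker {
        Listener getListener();
    }

    /** Links the non-null listeners in registration order; returns the first one, or null. */
    static Listener buildChain(List<Worker> workers) {
        Listener first = null;
        Listener previous = null;
        for (Worker worker : workers) {
            Listener current = worker.getListener();
            if (current == null) {
                continue;                 // this worker does not take part
            }
            if (first == null) {
                first = current;
            } else {
                previous.next = current;
            }
            previous = current;
        }
        return first;
    }

    public static void main(String[] args) {
        Worker ngram = () -> new Listener("ngram");
        Worker idle = () -> null;
        Worker spatial = () -> new Listener("spatial");
        for (Listener l = buildChain(Arrays.asList(ngram, idle, spatial)); l != null; l = l.next) {
            System.out.println(l.name);
        }
    }
}
```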

public void indexNode(Txn transaction, StoredNode node, NodePath path, StreamListener listener)

Indexes any kind of indexable node (currently elements, attributes and text nodes; comments and especially processing instructions might be considered in the future).

public void startElement(Txn transaction, ElementImpl node, NodePath path, StreamListener listener)

More specific than indexNode(). For an element. Will call

startElement() on listener if it is not

null. This is similar to Java SAX events.

public void attribute(Txn transaction, AttrImpl node, NodePath path, StreamListener listener)

More specific than indexNode(). For an attribute. Will call

attribute() on listener if it is not

null.

public void characters(Txn transaction, TextImpl node, NodePath path, StreamListener listener)

More specific than indexNode(). For a text node. Will call

characters() on listener if it is not

null.

public void endElement(Txn transaction, ElementImpl node, NodePath path, StreamListener listener)

Signals end of indexing for an element node. Will call endElement() on listener if it is not null.

public MatchListener getMatchListener(NodeProxy proxy)

Returns a org.exist.indexing.MatchListener for the given

node.

These two classes are essentially meant to be used by eXist itself. As a programmer, you will probably need just one or two of the above methods.

org.exist.indexing.Index and org.exist.indexing.AbstractIndex

Now let's get into the interfaces and classes that will need to be implemented or extended by the index programmer. The first of them is the interface org.exist.indexing.Index, which maintains the index itself.

As described above, a new instance of each configured implementation will be created by the constructor of org.exist.indexing.IndexManager, which calls newInstance() on the implementing class. An implementation therefore does not need to declare a constructor.

Here are the methods that an implementation has to provide:

String getIndexId()

Returns the class identifier of the index.

String getIndexName()

Returns the human-defined name of the index, if one was defined in the configuration file.

BrokerPool getBrokerPool()

Returns the org.exist.storage.BrokerPool that has created the

index.

void configure(BrokerPool pool, String dataDir, Element config)

Notifies the Index of the data directory (normally ${EXIST_HOME}/data) and of the configuration element in which it is declared.

void open()

Method that is executed when the Index is opened, whatever it

means. Consider this method as an initialization and allocate the necessary

resources here.

void close()

Method that is executed when the Index is closed, whatever it

means. Consider this method as a finalization and free the allocated resources

here.

void sync()

Unused.

void remove()

Method that is executed when eXist requires the index content to be entirely deleted, e.g. before repairing a corrupted database.

IndexWorker getWorker(DBBroker broker)

Returns the IndexWorker that operates on this Index on behalf of broker. One may want to create a new IndexWorker here or pick one from a pool.

boolean checkIndex(DBBroker broker)

To be called by applications that want to implement a consistency check on the

Index.

There is also an abstract class that implements org.exist.indexing.Index, org.exist.indexing.AbstractIndex, which can be used as a basis for most Index implementations. Most of its methods are abstract and still have to be implemented in the concrete classes. These are the few concrete methods:

public String getDataDir()

Returns the directory in which this Index operates. Usually

defined by configure() which itself receives eXist's

configuration settings.

There might be some Indexes for which the concept of data

directory isn't accurate.

public void configure(BrokerPool pool, String dataDir, Element config)

Its minimal implementation retains the

org.exist.storage.BrokerPool, the data directory and the

human-defined name, if defined in the configuration file (in an attribute called

id). Sub-classes may call super.configure() to

retain this default behaviour.

This member is protected:

protected static String ID = "Give me an ID !"

This is where the class identifier of the Index is defined.

Override this member with, say, MyClass.class.getName() to

provide a reasonably unique identifier within your system.
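One Java detail is worth keeping in mind here: static fields are hidden by a subclass, not overridden. The sketch below shows the consequence under the assumption that the base class accessor simply returns its own static ID (whether AbstractIndex is written exactly this way is not confirmed by this guide). BaseIndex, HidingIndex and OverridingIndex are hypothetical classes.

```java
final class IndexIdSketch {

    /** Hypothetical base class; assumes getIndexId() simply returns the static ID. */
    static abstract class BaseIndex {
        protected static String ID = "Give me an ID !";

        public String getIndexId() {
            return ID;   // always resolves to BaseIndex.ID
        }
    }

    /** Redeclares ID only: the inherited accessor does not see the new value. */
    static class HidingIndex extends BaseIndex {
        public static final String ID = HidingIndex.class.getName();
    }

    /** Redeclares ID and overrides the accessor so that the new value is returned. */
    static class OverridingIndex extends BaseIndex {
        public static final String ID = OverridingIndex.class.getName();

        @Override
        public String getIndexId() {
            return ID;   // resolves to OverridingIndex.ID
        }
    }

    public static void main(String[] args) {
        System.out.println(new HidingIndex().getIndexId());
        System.out.println(new OverridingIndex().getIndexId());
    }
}
```

If the base class behaves as assumed, a concrete index should therefore also override getIndexId(), or check that its identifier is the one actually reported at run time.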

org.exist.indexing.IndexWorker

The next important interface that will need to be implemented is

org.exist.indexing.IndexWorker which is responsible for managing

the data in the index. Remember that each

org.exist.storage.DBBroker will have such an

IndexWorker at its disposal and that their

IndexController will know what method of

IndexWorker to call and when to call it.

Here are the methods that have to be implemented in the concrete implementations:

public String getIndexId()

Returns the class identifier of the index.

public String getIndexName()

Returns the human-defined name of the index, if one was defined in the configuration file.

Object configure(IndexController controller, NodeList configNodes, Map namespaces)

This method receives the database's configuration object, usually defined in an XML file called conf.xml. Both the configuration nodes and the namespaces (remember that some configuration settings, e.g. paths, need namespaces to be defined) will be passed to the configure() method of the IndexWorker's IndexController. The IndexWorker can use this method to retain custom configuration options in a custom object that will be available in the java.util.Map returned by collection.getIndexConfiguration(broker).getCustomIndexSpec(INDEX_CLASS_IDENTIFIER).

The return type is free, but will generally be an implementation of java.util.Collection in order to retain several parameters.

void setDocument(DocumentImpl doc)

This method sets the org.exist.dom.DocumentImpl on which this

IndexWorker will have to work.

void setMode(int mode)

This method sets the operating mode in which this IndexWorker

will have to work. See below for further details on operating modes.

void setDocument(DocumentImpl doc, int mode)

A convenience method that sets both the

org.exist.dom.DocumentImpl and the operating mode.

DocumentImpl getDocument()

Returns the org.exist.dom.DocumentImpl on which this

IndexWorker will have to work.

int getMode()

Returns the operating mode in which this IndexWorker will have

to work.

void flush()

Called periodically by the IndexController or by any other

process. That is where data insertion, update or removal should actually take

place.

void removeCollection(Collection collection, DBBroker broker)

Called when a collection is to be removed. This allows the index entries for this collection to be deleted in a single operation, without the need for a StreamListener (see below) or a call to setMode() or setDocument().

StoredNode getReindexRoot(StoredNode node, NodePath path, boolean includeSelf)

Determines the node which should be reindexed together with its descendants. This

will give a hint to the IndexController to determine from which

node reindexing should start.

StreamListener getListener()

Returns a StreamListener that will intercept the stream of (re)indexed nodes. IndexWorkers that are not interested in the data (depending on e.g. the document and/or the operating mode) may return null here.

MatchListener getMatchListener(NodeProxy proxy)

Returns a org.exist.indexing.MatchListener for the given

node.

boolean checkIndex(DBBroker broker)

To be called by applications that want to implement a consistency check on the index.

Occurrences[] scanIndex(DocumentSet docs)

Returns an array of org.exist.dom.DocumentImpl.Occurrences, that is, an ordered list of the index entries in textual form, each associated with its number of occurrences and a list of the documents containing it.

For some indexes, the concept of ordered or textual occurrences might not be meaningful.

org.exist.indexing.StreamListener and org.exist.indexing.AbstractStreamListener

The interface org.exist.indexing.StreamListener has these

public members:

public final static int UNKNOWN = -1;

public final static int STORE = 0;

public final static int REMOVE_ALL_NODES = 1;

public final static int REMOVE_SOME_NODES = 2;

These constants are used by the setMode() method in org.exist.indexing.IndexController, which is itself called by the different org.exist.storage.DBBrokers when they have to (re)index a node and its descendants. As their names suggest, there is a mode for storing nodes and two modes for removing them from the indexes. The difference between StreamListener.REMOVE_ALL_NODES and StreamListener.REMOVE_SOME_NODES is that the former removes all the nodes from a document whereas the latter removes only some nodes from a document, usually the descendants of the node returned by getReindexRoot(). We thus have the opportunity to trigger a process that will directly remove all the nodes from a given document without having to listen to each of them. Such a technique is described below.
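The difference between the modes can be illustrated with a deliberately small model. This is a sketch under assumptions, not the spatial index's code: the hypothetical Worker keeps its entries in memory as document id to node ids, wantsNodeEvents() plays the role of getListener() returning a listener or null, and the constants merely repeat the values listed above.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

final class RemovalModeSketch {

    // Same values as the StreamListener constants listed above
    static final int UNKNOWN = -1;
    static final int STORE = 0;
    static final int REMOVE_ALL_NODES = 1;
    static final int REMOVE_SOME_NODES = 2;

    /** Hypothetical worker keeping its entries as document id -> node ids. */
    static final class Worker {
        final Map<Integer, Set<String>> entries = new HashMap<>();
        private int docId = -1;
        private int mode = UNKNOWN;

        void setDocument(int docId, int mode) {
            this.docId = docId;
            this.mode = mode;
        }

        /** False for REMOVE_ALL_NODES: no need to see each node when the whole document goes. */
        boolean wantsNodeEvents() {
            return mode == STORE || mode == REMOVE_SOME_NODES;
        }

        /** Called once per node while the node stream is being listened to. */
        void onNode(String nodeId) {
            if (mode == STORE) {
                entries.computeIfAbsent(docId, k -> new HashSet<>()).add(nodeId);
            } else if (mode == REMOVE_SOME_NODES) {
                Set<String> nodes = entries.get(docId);
                if (nodes != null) {
                    nodes.remove(nodeId);
                }
            }
        }

        /** In REMOVE_ALL_NODES mode, drops every entry of the document in one operation. */
        void flush() {
            if (mode == REMOVE_ALL_NODES) {
                entries.remove(docId);
            }
        }
    }

    public static void main(String[] args) {
        Worker worker = new Worker();
        worker.setDocument(1, STORE);
        worker.onNode("1.1");
        worker.onNode("1.2");
        worker.setDocument(1, REMOVE_ALL_NODES);
        System.out.println(worker.wantsNodeEvents());
        worker.flush();
        System.out.println(worker.entries.containsKey(1));
    }
}
```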

Here are the methods that must be implemented by an implementation:

IndexWorker getWorker()

Returns the IndexWorker that owns this

StreamListener.

void setNextInChain(StreamListener listener);

Should not be used. Used to specify which is the next

StreamListener in the IndexController's

StreamListeners pipeline.

StreamListener getNextInChain();

Returns the next StreamListener in the

IndexController's StreamListeners pipeline.

This is very important because it is the responsibility of each StreamListener to forward the event stream to the next StreamListener in the pipeline.

void startElement(Txn transaction, ElementImpl element, NodePath path)

Signals the start of an element to the listener.

void attribute(Txn transaction, AttrImpl attrib, NodePath path)

Passes an attribute to the listener.

void characters(Txn transaction, TextImpl text, NodePath path)

Passes some character data to the listener.

void endElement(Txn transaction, ElementImpl element, NodePath path)

Signals the end of an element to the listener. This allows any temporary resource created since the matching startElement() call to be freed.

Besides implementing the StreamListener interface, each custom listener should extend org.exist.indexing.AbstractStreamListener.

This abstract class provides concrete implementations of setNextInChain() and getNextInChain() that should normally never be overridden.

It also provides dummy startElement(), attribute(), characters() and endElement() methods that do nothing but forward the node to the next StreamListener in the IndexController's StreamListeners pipeline.

public abstract IndexWorker getWorker()

This method remains abstract, since we cannot know which IndexWorker will own the listener until we have a concrete implementation.
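The forwarding responsibility described above can be sketched with a reduced model that handles a single event type. The names are hypothetical: BaseListener stands in for AbstractStreamListener, RecordingListener for a custom listener, and plain strings replace eXist's transaction, node and path parameters.

```java
import java.util.ArrayList;
import java.util.List;

final class ForwardingListenerSketch {

    /** Hypothetical, reduced stand-in for AbstractStreamListener (one event type only). */
    static abstract class BaseListener {
        private BaseListener next;

        void setNextInChain(BaseListener listener) { next = listener; }

        BaseListener getNextInChain() { return next; }

        /** Default behaviour: do nothing but forward the event. */
        void characters(String text) {
            if (next != null) {
                next.characters(text);
            }
        }
    }

    /** Custom listener: does its own work, then lets the base class forward. */
    static final class RecordingListener extends BaseListener {
        final List<String> seen = new ArrayList<>();

        @Override
        void characters(String text) {
            seen.add(text);            // index-specific processing
            super.characters(text);    // without this call, later listeners would see nothing
        }
    }

    public static void main(String[] args) {
        RecordingListener first = new RecordingListener();
        RecordingListener second = new RecordingListener();
        first.setNextInChain(second);
        first.characters("abcde");
        System.out.println(first.seen + " " + second.seen);
    }
}
```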

Use case: developing an indexing architecture for GML geometries

To demonstrate how modular eXist Indexes are, we have decided to

show how a spatial Index could be implemented. What makes its design

interesting is that this kind of Index doesn't store character data

from the document, nor does it use a org.exist.storage.index.BFile to

store the index entries. Instead, we will store WKB index entries in a JDBC database,

namely a HSQLDB to keep the distribution as

light as possible and reduce the number of external dependencies, but it wouldn't be too

difficult to use another one like PostGIS given that the implementation has itself been designed in a quite

modular way.

In eXist's Git repository, the modularized Indexes code is in

extensions/indexes and the file system's architecture is designed to

follow eXist's core architecture, i.e. org.exist.indexing.* for the

Indexes and org.exist.xquery.* for their

associated Modules. There is also a dedicated location for required

external libraries and for the test cases. The build system should normally be able to

download the required libraries and build all the files automatically, and even launch

the tests provided that the DB's configuration file declares the

Indexes (see above) and their associated Modules

(see below).

The described spatial Index relies heavily on the excellent open source libraries provided by the Geotools project. We have experienced a few problems that will be mentioned further on, but since feedback has been provided, the situation should improve in the future, making the current workarounds redundant.

The Index has been tested with only one file, a topography layer of Port Talbot, which is available as sample data from the Ordnance Survey of Great Britain.

Writing the concrete implementation of org.exist.indexing.AbstractIndex

Well, in fact we will start by writing an abstract implementation first. As said

above, we have planned a modular JDBC spatial Index, which will

be abstract, and that will be extended by a concrete HSQLDB

Index.

Let's start with this:

package org.exist.indexing.spatial;

public abstract class AbstractGMLJDBCIndex extends AbstractIndex {

    public final static String ID = AbstractGMLJDBCIndex.class.getName();

    private final static Logger LOG = Logger.getLogger(AbstractGMLJDBCIndex.class);

    protected HashMap workers = new HashMap();

    protected Connection conn = null;

}

Here we define an abstract class that extends org.exist.indexing.AbstractIndex and thus implements org.exist.indexing.Index. We also define a few members: ID, which will be returned by the getIndexId() method inherited unchanged from org.exist.indexing.AbstractIndex, a Logger, a java.util.HashMap that will be a "pool" of IndexWorkers (one for each org.exist.storage.DBBroker) and a java.sql.Connection that will handle the database operations at the index level.

Let's now introduce this general-purpose interface:

public interface SpatialOperator {

    public static int UNKNOWN = -1;
    public static int EQUALS = 1;
    public static int DISJOINT = 2;
    public static int INTERSECTS = 3;
    public static int TOUCHES = 4;
    public static int CROSSES = 5;
    public static int WITHIN = 6;
    public static int CONTAINS = 7;
    public static int OVERLAPS = 8;

}

This defines the spatial operators that will be used by spatial queries. For more information about the semantics, see the JTS documentation (chapter 11). We will use this wonderful library every time a spatial computation is required, as does the Geotools project.

Here are a few concrete methods that should be usable by any JDBC-enabled database:

public AbstractGMLJDBCIndex() {
}

public void configure(BrokerPool pool, String dataDir, Element config) throws DatabaseConfigurationException {
    super.configure(pool, dataDir, config);
    try {
        checkDatabase();
    } catch (ClassNotFoundException e) {
        throw new DatabaseConfigurationException(e.getMessage());
    } catch (SQLException e) {
        throw new DatabaseConfigurationException(e.getMessage());
    }
}

public void open() throws DatabaseConfigurationException {
}

public void close() throws DBException {
    Iterator i = workers.values().iterator();
    while (i.hasNext()) {
        AbstractGMLJDBCIndexWorker worker = (AbstractGMLJDBCIndexWorker)i.next();
        worker.flush();
        worker.setDocument(null, StreamListener.UNKNOWN);
    }
    shutdownDatabase();
}

public void sync() throws DBException {
}

public void remove() throws DBException {
    Iterator i = workers.values().iterator();
    while (i.hasNext()) {
        AbstractGMLJDBCIndexWorker worker = (AbstractGMLJDBCIndexWorker)i.next();
        worker.flush();
        worker.setDocument(null, StreamListener.UNKNOWN);
    }
    removeIndexContent();
    shutdownDatabase();
    deleteDatabase();
}

public boolean checkIndex(DBBroker broker) {
    return getWorker(broker).checkIndex(broker);
}

First, an empty constructor, not even necessary since the Index

is created through the newInstance() method of its interface (see

above).

Then, a configuration method that calls its ancestor, whose behaviour fulfills our needs. This method calls a checkDatabase() method whose semantics depend on the underlying DB. The basic idea is to prevent eXist from continuing its initialization if there is a problem with the DB.

Then we will do nothing during open(). No need to open a database, which is costly, if we don't need it.

The close() method will flush any pending operation currently queued by the IndexWorkers and reset their state in order to prevent them from starting any further operation, which should never be possible if eXist is their only user. Then it will call a shutdownDatabase() method whose semantics depend on the underlying DB. They can be fairly simple for DBs that shut down automatically when the virtual machine shuts down.

The sync() method is never called by eXist. It is only there to satisfy the interface.

The remove() method is similar to close(). It then calls two database-dependent methods that are somewhat redundant. deleteDatabase() will probably not be able to do what its name suggests if eXist doesn't own the admin rights. Conversely, removeIndexContent() would probably have nothing to do if eXist owns the admin rights, since physically destroying the DB would probably be more efficient than deleting table contents.

checkIndex() will delegate the task to the

broker's IndexWorker.

The remaining methods are DB-dependent and thus abstract:

public abstract IndexWorker getWorker(DBBroker broker);
protected abstract void checkDatabase() throws ClassNotFoundException, SQLException;
protected abstract void shutdownDatabase() throws DBException;
protected abstract void deleteDatabase() throws DBException;
protected abstract void removeIndexContent() throws DBException;
protected abstract Connection acquireConnection(DBBroker broker) throws SQLException;
protected abstract void releaseConnection(DBBroker broker) throws SQLException;

Let's now see what an HSQLDB-dependent implementation would look like by describing the concrete class:

package org.exist.indexing.spatial;

public class GMLHSQLIndex extends AbstractGMLJDBCIndex {

    private final static Logger LOG = Logger.getLogger(GMLHSQLIndex.class);

    public static String db_file_name_prefix = "spatial_index";

    public static String TABLE_NAME = "SPATIAL_INDEX_V1";

    private DBBroker connectionOwner = null;

    private long connectionTimeout = 100000L;

    public GMLHSQLIndex() {
    }

}

Of course, we extend org.exist.indexing.spatial.AbstractGMLJDBCIndex. Then a few members are defined: a Logger, a file prefix (used for the files that HSQLDB storage requires, namely spatial_index.lck, spatial_index.log, spatial_index.script and spatial_index.properties), a table name in which the spatial index data will be stored, and a variable that will hold the org.exist.storage.DBBroker that currently holds a connection to the DB (we could have used an IndexWorker here, given their 1:1 relationship). The problem is that we run HSQLDB in embedded mode and that only one connection is available at a given time.

A more elaborate DBMS, or HSQLDB running in server mode, would permit the allocation of one connection per IndexWorker, but we have chosen to keep things simple for now. Indeed, while IndexWorkers are thread-safe (because each org.exist.storage.DBBroker operates within its own thread), a single connection has to be controlled by the Index, which is itself controlled by the org.exist.storage.BrokerPool. See below how we will handle concurrency, given such prerequisites.

The last member is the timeout when a Connection to the DB is

requested.

As we can see, we have an empty constructor again.

The next method calls its ancestor's configure() method and

just retains the content of the connectionTimeout attribute as

defined in the configuration file.

public void configure(BrokerPool pool, String dataDir, Element config)
        throws DatabaseConfigurationException {
    super.configure(pool, dataDir, config);
    //Element.getAttribute() returns an empty string, not null, when the attribute is absent
    String param = config.getAttribute("connectionTimeout");
    if (param != null && param.length() > 0) {
        try {
            connectionTimeout = Long.parseLong(param);
        } catch (NumberFormatException e) {
            LOG.error("Invalid value for 'connectionTimeout'", e);
        }
    }
}
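When reading optional attributes such as connectionTimeout, it helps to remember that org.w3c.dom.Element.getAttribute() returns an empty string for a missing attribute. The stand-alone sketch below shows one way to fall back to a default in that case; TimeoutAttributeSketch, readTimeout() and parse() are hypothetical helpers, and the module element is a cut-down version of the configuration entry shown earlier.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;

final class TimeoutAttributeSketch {

    static final long DEFAULT_TIMEOUT = 100000L;

    /** Returns the connectionTimeout attribute, or the default if it is absent or invalid. */
    static long readTimeout(Element config) {
        String param = config.getAttribute("connectionTimeout");   // "" when absent
        if (param.isEmpty()) {
            return DEFAULT_TIMEOUT;
        }
        try {
            return Long.parseLong(param.trim());
        } catch (NumberFormatException e) {
            return DEFAULT_TIMEOUT;
        }
    }

    /** Parses an XML string and returns its root element. */
    static Element parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                    .getDocumentElement();
        } catch (Exception e) {
            throw new IllegalArgumentException("Not well-formed XML", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(readTimeout(parse("<module id=\"spatial-index\" connectionTimeout=\"5000\"/>")));
        System.out.println(readTimeout(parse("<module id=\"spatial-index\"/>")));
    }
}
```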

The next method is also quite straightforward:

public IndexWorker getWorker(DBBroker broker) {
    GMLHSQLIndexWorker worker = (GMLHSQLIndexWorker)workers.get(broker);
    if (worker == null) {
        worker = new GMLHSQLIndexWorker(this, broker);
        workers.put(broker, worker);
    }
    return worker;
}

This picks an IndexWorker (more precisely an org.exist.indexing.spatial.GMLHSQLIndexWorker) for the given broker from the "pool". If needed, namely the first time the method is called with that parameter, it creates one. Notice that this IndexWorker is DB-dependent. It will be described below.
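The same lazy, one-worker-per-broker lookup can be written with a typed map. The sketch below is only an illustration: Broker and Worker are hypothetical stand-ins for DBBroker and the index worker, and the choice of ConcurrentHashMap assumes that getWorker() might be reached from several broker threads at once, which this guide does not state.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class WorkerPoolSketch {

    /** Hypothetical stand-in for org.exist.storage.DBBroker. */
    static final class Broker { }

    /** Hypothetical stand-in for an IndexWorker bound to one broker. */
    static final class Worker {
        final Broker owner;
        Worker(Broker owner) { this.owner = owner; }
    }

    private final Map<Broker, Worker> workers = new ConcurrentHashMap<>();

    /** Returns the worker bound to this broker, creating it on the first request. */
    Worker getWorker(Broker broker) {
        return workers.computeIfAbsent(broker, Worker::new);
    }

    public static void main(String[] args) {
        WorkerPoolSketch index = new WorkerPoolSketch();
        Broker broker = new Broker();
        System.out.println(index.getWorker(broker) == index.getWorker(broker));
    }
}
```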

Then come a few general-purpose methods:

protected void checkDatabase()
        throws ClassNotFoundException, SQLException {
    Class.forName("org.hsqldb.jdbcDriver");
}

protected void shutdownDatabase()
        throws DBException {
    try {
        if (conn != null) {
            Statement stmt = conn.createStatement();
            stmt.executeQuery("SHUTDOWN");
            stmt.close();
            conn.close();
            if (LOG.isDebugEnabled())
                LOG.debug("GML index: " + getDataDir() + "/" + db_file_name_prefix + " closed");
        }
    } catch (SQLException e) {
        throw new DBException(e.getMessage());
    } finally {
        conn = null;
    }
}

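protected void deleteDatabase()
        throws DBException {
    File directory = new File(getDataDir());
    File[] files = directory.listFiles(
        new FilenameFilter() {
            public boolean accept(File dir, String name) {
                return name.startsWith(db_file_name_prefix);
            }
        }
    );
    //listFiles() returns null if the data directory does not exist or cannot be read
    if (files == null)
        return;
    boolean deleted = true;
    for (int i = 0; i < files.length ; i++) {
        deleted &= files[i].delete();
    }
    if (!deleted)
        LOG.warn("GML index: some files of " + getDataDir() + "/" + db_file_name_prefix + " could not be deleted");
}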

protected void removeIndexContent()
        throws DBException {
    try {
        //Let's be lazy here: we only delete the index content if we have a connection
        if (conn != null) {
            Statement stmt = conn.createStatement();
            int nodeCount = stmt.executeUpdate("DELETE FROM " + GMLHSQLIndex.TABLE_NAME + ";");
            stmt.close();
            if (LOG.isDebugEnabled())
                LOG.debug("GML index: " + getDataDir() + "/" + db_file_name_prefix + ". " +
                    nodeCount + " nodes removed");
        }
    } catch (SQLException e) {
        throw new DBException(e.getMessage());
    }
}

checkDatabase() just checks that we have a suitable driver in

the CLASSPATH. We don't want to open the database right now. It costs too

much.

shutdownDatabase() is just one of the many ways to shutdown a

HSQLDB.

deleteDatabase() is just a file system management problem; remember that the database should be closed at that moment, so there should be no file locking issues.

removeIndexContent() deletes the table that contains spatial

data. As explained above, this is less efficient than deleting the whole database

though!
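As an illustration of the file system side of deleteDatabase(), here is a minimal stand-alone sketch of deleting files by name prefix. The class name PrefixFileCleaner and its method are hypothetical and not part of eXist; the only difference from the listing above is that it guards against listFiles() returning null, which happens when the data directory does not exist or cannot be read.

```java
import java.io.File;

public class PrefixFileCleaner {

    /**
     * Deletes every file in dataDir whose name starts with prefix.
     * Returns true only if the directory could be listed and every
     * matching file was deleted.
     */
    public static boolean deleteByPrefix(File dataDir, String prefix) {
        // listFiles() returns null when dataDir is not a readable directory
        File[] files = dataDir.listFiles((dir, name) -> name.startsWith(prefix));
        if (files == null) {
            return false;
        }
        boolean deleted = true;
        for (File file : files) {
            deleted &= file.delete();
        }
        return deleted;
    }
}
```

The boolean result makes it possible for a caller to log or report a partial deletion instead of silently ignoring it.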

The next two methods are JDBC-specific and, given the way they are implemented, specific to an embedded HSQLDB. The current code is directly adapted from org.exist.storage.lock.ReentrantReadWriteLock to show that connection management must be strictly controlled, given the concurrency induced by using many org.exist.storage.DBBroker instances. Even though DBBrokers are thread-safe, access to shared storage must be synchronized, in particular when flush() is called.

org.exist.storage.index.BFile users would call getLock() to acquire and release locks on the index files. Our solution is thus very similar.

However, since most JDBC databases are able to work in a concurrent context, it would be better never to call these Index-level methods from the IndexWorkers and to let each IndexWorker handle its own connection to the underlying DB.

protected Connection acquireConnection(DBBroker broker)

throws SQLException {

synchronized (this) {

if (connectionOwner == null) {

connectionOwner = broker;

if (conn == null)

initializeConnection();

return conn;

} else {

long timeOut_ = connectionTimeout;

long waitTime = timeOut_;

long start = System.currentTimeMillis();

try {

for (;;) {

wait(waitTime);

if (connectionOwner == null) {

connectionOwner = broker;

if (conn == null)

//We should never get there since the connection should have been initialized

//by the first request from a worker

initializeConnection();

return conn;

} else {

waitTime = timeOut_ - (System.currentTimeMillis() - start);

if (waitTime <= 0) {

LOG.error("Time out while trying to get connection");

}

}

}

} catch (InterruptedException ex) {

notify();

throw new RuntimeException("interrupted while waiting for lock");

}

}

}

}

protected synchronized void releaseConnection(DBBroker broker)

throws SQLException {

if (connectionOwner == null)

throw new SQLException("Attempted to release a connection that wasn't acquired");

connectionOwner = null;

}

acquireConnection() acquires an exclusive JDBC Connection to the storage engine for an IndexWorker (or an org.exist.storage.DBBroker, which roughly means the same thing). This is where the Connection is created if necessary (see below), so that the performance cost of opening the first connection is paid only when it is actually needed.

releaseConnection() marks the connection as unused. It thus becomes available for the next request.
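The acquire/release protocol can be sketched in isolation with plain Java monitors. This is a simplified illustration, not the eXist implementation: the class ExclusiveResource and its methods are hypothetical names. Unlike the listing above, this sketch throws an exception once the time-out has elapsed and wakes up waiting threads when the resource is released.

```java
public class ExclusiveResource<T> {

    private final T resource;
    private Object owner = null;

    public ExclusiveResource(T resource) {
        this.resource = resource;
    }

    /** Blocks until the resource is free or timeoutMillis has elapsed. */
    public synchronized T acquire(Object requester, long timeoutMillis)
            throws InterruptedException {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (owner != null) {
            long remaining = deadline - System.currentTimeMillis();
            if (remaining <= 0) {
                // Fail explicitly rather than calling wait() with a non-positive time-out
                throw new IllegalStateException("Timed out while waiting for the resource");
            }
            wait(remaining);
        }
        owner = requester;
        return resource;
    }

    /** Marks the resource as free and wakes up the threads waiting for it. */
    public synchronized void release(Object requester) {
        if (owner != requester) {
            throw new IllegalStateException("Resource released by a non-owner");
        }
        owner = null;
        notifyAll();
    }
}
```

The while loop re-checks the owner after every wake-up, so a thread that is notified but loses the race to another requester simply goes back to waiting for the remaining time.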

The last method concentrates the index-level DB-dependent code in just one place (removeIndexContent() is relatively DB-independent).

private void initializeConnection()

throws SQLException {

System.setProperty("hsqldb.cache_scale", "11");

System.setProperty("hsqldb.cache_size_scale", "12");

System.setProperty("hsqldb.default_table_type", "cached");

//Get a connection to the DB... and keep it

this.conn = DriverManager.getConnection("jdbc:hsqldb:" + getDataDir() + "/" + db_file_name_prefix, "sa", "");

//Declared outside the try block so that it is visible in the finally clause
ResultSet rs = null;

try {

rs = this.conn.getMetaData().getTables(null, null, TABLE_NAME, new String[] { "TABLE" });

rs.last();

if (rs.getRow() == 1) {

if (LOG.isDebugEnabled())

LOG.debug("Opened GML index: " + getDataDir() + "/" + db_file_name_prefix);

//Create the data structure if it doesn't exist

} else if (rs.getRow() == 0) {

Statement stmt = conn.createStatement();

stmt.executeUpdate("CREATE TABLE " + TABLE_NAME + "(" +

/*1*/ "DOCUMENT_URI VARCHAR, " +

/*2*/ "NODE_ID_UNITS INTEGER, " +

/*3*/ "NODE_ID BINARY, " +

...

/*26*/ "IS_VALID BOOLEAN, " +

//Enforce uniqueness

"UNIQUE (" +

"DOCUMENT_URI, NODE_ID_UNITS, NODE_ID" +

")" +

")"

);

stmt.executeUpdate("CREATE INDEX DOCUMENT_URI ON " + TABLE_NAME + " (DOCUMENT_URI);");

...

stmt.close();

if (LOG.isDebugEnabled())

LOG.debug("Created GML index: " + getDataDir() + "/" + db_file_name_prefix);

} else {

throw new SQLException("2 tables with the same name ?");

}

} finally {

if (rs != null)

rs.close();

}

}

This method opens the Connection (the only one, since we keep a single connection) and checks that we have an SQL table for the spatial data. If not, i.e. if the spatial index doesn't exist yet, a table is created with the following structure:

| Field name | Field type | Description | Comments |
|---|---|---|---|
| DOCUMENT_URI | VARCHAR | The document's URI | |
| NODE_ID_UNITS | INTEGER | The number of useful bits in NODE_ID | See below |
| NODE_ID | BINARY | The node ID, as a byte array | See above. Only some bits might be considered due to obvious data alignment requirements |
| GEOMETRY_TYPE | VARCHAR | The geometry type | As returned by the JTS |
| SRS_NAME | VARCHAR | The SRS of the geometry | |
| WKT | VARCHAR | The Well-Known Text representation of the geometry | |
| WKB | BINARY | The WKB representation of the geometry | |
| MINX | DOUBLE | The minimal X of the geometry | |
| MAXX | DOUBLE | The maximal X of the geometry | |
| MINY | DOUBLE | The minimal Y of the geometry | |
| MAXY | DOUBLE | The maximal Y of the geometry | |
| CENTROID_X | DOUBLE | The X of the geometry's centroid | |
| CENTROID_Y | DOUBLE | The Y of the geometry's centroid | |
| AREA | DOUBLE | The area of the geometry | Expressed in the measure defined in its SRS |
| EPSG4326_WKT | VARCHAR | The WKT representation of the geometry | In the epsg:4326 SRS |
| EPSG4326_WKB | BINARY | The WKB representation of the geometry | In the epsg:4326 SRS |
| EPSG4326_MINX | DOUBLE | The minimal X of the geometry | In the epsg:4326 SRS |
| EPSG4326_MAXX | DOUBLE | The maximal X of the geometry | In the epsg:4326 SRS |
| EPSG4326_MINY | DOUBLE | The minimal Y of the geometry | In the epsg:4326 SRS |
| EPSG4326_MAXY | DOUBLE | The maximal Y of the geometry | In the epsg:4326 SRS |
| EPSG4326_CENTROID_X | DOUBLE | The X of the geometry's centroid | In the epsg:4326 SRS |
| EPSG4326_CENTROID_Y | DOUBLE | The Y of the geometry's centroid | In the epsg:4326 SRS |
| EPSG4326_AREA | DOUBLE | The area of the geometry | In the epsg:4326 SRS (measure unknown, to be clarified) |
| IS_CLOSED | BOOLEAN | Whether or not this geometry is "closed" | See the JTS documentation (chapter 13) |
| IS_SIMPLE | BOOLEAN | Whether or not this geometry is "simple" | See the JTS documentation (chapter 13) |
| IS_VALID | BOOLEAN | Whether or not this geometry is "valid" | See the JTS documentation (chapter 13). Should always be TRUE |

Uniqueness will be enforced on a (DOCUMENT_URI, NODE_ID_UNITS,

NODE_ID) basis. Indeed, we can have at most one index entry for a given

node in a given document.

Also, indexes are created on these fields to help queries:

-

DOCUMENT_URI

-

NODE_ID

-

GEOMETRY_TYPE

-

WKB

-

EPSG4326_WKB

-

EPSG4326_MINX

-

EPSG4326_MAXX

-

EPSG4326_MINY

-

EPSG4326_MAXY

-

EPSG4326_CENTROID_X

-

EPSG4326_CENTROID_Y

Every geometry will be internally stored in both its original SRS and in the epsg:4326 SRS. Having this kind of common, world-wide applicable SRS for all geometries in the index makes it possible to perform operations on them even if they are originally defined in different SRSes.

Important:

By default, eXist's build will download the lightweight

gt2-epsg-wkt-XXX.jar library which lacks some parameters, the

Bursa-Wolf ones. A better accuracy for geographic transformations might

be obtained by using a heavier library like

gt2-epsg-hsql-XXX.jar which is documented here.

Writing the concrete implementation of org.exist.indexing.IndexWorker

Just like for org.exist.indexing.spatial.AbstractGMLJDBCIndex, we will start by designing a database-independent abstract class. This class should normally be the basis of every JDBC spatial index. It will handle most of the hard work.

Let's start with a few members and a few general-purpose public methods:

package org.exist.indexing.spatial;

public abstract class AbstractGMLJDBCIndexWorker implements IndexWorker {

public static String GML_NS = "http://www.opengis.net/gml";

protected final static String INDEX_ELEMENT = "gml";

private static final Logger LOG = Logger.getLogger(AbstractGMLJDBCIndexWorker.class);

protected IndexController controller;

protected AbstractGMLJDBCIndex index;

protected DBBroker broker;

protected int currentMode = StreamListener.UNKNOWN;

protected DocumentImpl currentDoc = null;

private boolean isDocumentGMLAware = false;

protected Map geometries = new TreeMap();

NodeId currentNodeId = null;

Geometry streamedGeometry = null;

boolean documentDeleted = false;

int flushAfter = -1;

protected GMLHandlerJTS geometryHandler = new GeometryHandler();

protected GMLFilterGeometry geometryFilter = new GMLFilterGeometry(geometryHandler);

protected GMLFilterDocument geometryDocument = new GMLFilterDocument(geometryFilter);

protected TreeMap transformations = new TreeMap();

protected boolean useLenientMode = false;

protected GMLStreamListener gmlStreamListener = new GMLStreamListener();

protected GeometryCoordinateSequenceTransformer coordinateTransformer = new GeometryCoordinateSequenceTransformer();

protected GeometryTransformer gmlTransformer = new GeometryTransformer();

protected WKBWriter wkbWriter = new WKBWriter();

protected WKBReader wkbReader = new WKBReader();

protected WKTWriter wktWriter = new WKTWriter();

protected WKTReader wktReader = new WKTReader();

protected Base64Encoder base64Encoder = new Base64Encoder();

protected Base64Decoder base64Decoder = new Base64Decoder();

public AbstractGMLJDBCIndexWorker(AbstractGMLJDBCIndex index, DBBroker broker) {

this.index = index;

this.broker = broker;

}

public String getIndexId() {

return AbstractGMLJDBCIndex.ID;

}

public String getIndexName() {

return index.getIndexName();

}

public Index getIndex() {

return index;

}

}

Of course,

org.exist.indexing.spatial.AbstractGMLJDBCIndexWorker implements

org.exist.indexing.IndexWorker.

GML_NS is the GML namespace for which the spatial index is

specially designed. Use this public member to avoid redundancy and, worse,

inconsistencies.

INDEX_ELEMENT is the name of the configuration element that is relevant to our Index configuration. To configure a collection in order to index its GML data, define such a configuration:

<collection xmlns="http://exist-db.org/collection-config/1.0">

<index>

<gml flushAfter="200"/>

</index>

</collection>

Got the <gml> element? We will shortly see how this information is able to configure our IndexWorker.
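To make the role of the flushAfter attribute concrete, here is a small stand-alone sketch that reads it from such a configuration using only the JDK's DOM parser. The class FlushAfterReader and its methods are hypothetical and only meant as an illustration; the namespace URI and the attribute name are taken from the configuration shown above, and -1 is used as the "not configured" value.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

public class FlushAfterReader {

    private static final String CONFIG_NS = "http://exist-db.org/collection-config/1.0";

    /** Returns the flushAfter value of the element, or -1 when absent or invalid. */
    public static int readFlushAfter(Element gmlElement) {
        // getAttribute() returns an empty string when the attribute is missing
        String param = gmlElement.getAttribute("flushAfter");
        if (param.isEmpty()) {
            return -1;
        }
        try {
            return Integer.parseInt(param);
        } catch (NumberFormatException e) {
            return -1;
        }
    }

    /** Parses a collection configuration and returns its first gml element, or null. */
    public static Element findGmlElement(String configXml) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true);
        Document doc = factory.newDocumentBuilder()
                .parse(new InputSource(new StringReader(configXml)));
        NodeList nodes = doc.getElementsByTagNameNS(CONFIG_NS, "gml");
        return nodes.getLength() == 0 ? null : (Element) nodes.item(0);
    }
}
```

With the configuration above, readFlushAfter(findGmlElement(...)) yields 200; a missing or non-numeric attribute falls back to -1.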

controller, index and

broker should now be quite straightforward.

currentMode and currentDoc should also be

straightforward.

geometries is a collection of com.vividsolutions.jts.geom.Geometry instances that are currently held in memory, waiting to be "flushed" to the database. Depending on currentMode, they are pending insertion or removal.

currentNodeId is used to share the ID of the node currently

being processed between the different inner classes.

streamedGeometry is the last com.vividsolutions.jts.geom.Geometry that has been generated by GML parsing. It is null if the geometry is not topologically well-formed. This may be an overly restrictive feature of the Geotools parser, which also throws NullPointerExceptions (!) if the GML is somehow not well-formed. See GEOT-742 for more information on this issue.

documentDeleted is a flag indicating that the current document has been deleted and that we don't have to process it any more. Remember that StreamListener.REMOVE_ALL_NODES sends events for all nodes.

flushAfter will hold our configuration's setting.

geometryHandler is our GML geometries SAX handler that will

convert GML to a com.vividsolutions.jts.geom.Geometry instance.

It is included in a handler chain composed of geometryFilter and

geometryDocument.

transformations will cache a list of transformations between a source and a target SRS.

useLenientMode will be set to true if the transformation libraries that are in the CLASSPATH don't have the Bursa-Wolf parameters. Transformations will be attempted, but with a precision loss (see above).

gmlStreamListener is our own implementation of

org.exist.indexing.StreamListener. Since there is a 1:1 (or even

1:0) relationship with the IndexWorker, it will be implemented as

an inner class and will be described below.

coordinateTransformer will be needed during

Geometry transformations to other SRSes.

gmlTransformer will be needed during

Geometry transformations to XML.

wkbWriter and wkbReader will be needed

during Geometry serialization and deserialization to and from the

database.

wktWriter and wktReader will be needed

during Geometry WKT serialization and deserialization to and from

the database. WKT could be dynamically generated from Geometry

but we have chosen to store it in the HSQLDB.

base64Encoder and base64Decoder will be needed to convert binary data, namely WKB, to XML types, namely xs:base64Binary.
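For readers unfamiliar with the binary-to-text step, the following sketch shows the same kind of round trip using the JDK's java.util.Base64 as a stand-in for the Base64Encoder and Base64Decoder members. The class name WkbBase64 is hypothetical, and the byte array used in the usage note is an arbitrary placeholder, not a real WKB value.

```java
import java.util.Base64;

public class WkbBase64 {

    /** Encodes binary data (e.g. a WKB byte array) to its Base64 text form. */
    public static String encode(byte[] wkb) {
        return Base64.getEncoder().encodeToString(wkb);
    }

    /** Decodes Base64 text back to the original bytes. */
    public static byte[] decode(String text) {
        return Base64.getDecoder().decode(text);
    }
}
```

For example, encode(new byte[] {1, 2, 3}) returns "AQID", and decoding that string gives back the three original bytes.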

No need to comment on the methods, except maybe getIndexId(), which returns the static ID of the Index. There is no chance of getting it wrong with such a design.

The next method is a bit specific:

public Object configure(IndexController controller, NodeList configNodes, Map namespaces)

throws DatabaseConfigurationException {

this.controller = controller;

Map map = null;

for(int i = 0; i < configNodes.getLength(); i++) {

Node node = configNodes.item(i);

if (node.getNodeType() == Node.ELEMENT_NODE && INDEX_ELEMENT.equals(node.getLocalName())) {

map = new TreeMap();

GMLIndexConfig config = new GMLIndexConfig(namespaces, (Element)node);

map.put(AbstractGMLJDBCIndex.ID, config);

}

}

return map;

}

It is only interested in the <gml> element of the configuration. If it finds one, it creates an org.exist.indexing.spatial.GMLIndexConfig instance, which is a very simple class:

package org.exist.indexing.spatial;

public class GMLIndexConfig {

private static final Logger LOG = Logger.getLogger(GMLIndexConfig.class);

private final static String FLUSH_AFTER = "flushAfter";

private int flushAfter = -1;

public GMLIndexConfig(Map namespaces, Element node)

throws DatabaseConfigurationException {

String param = ((Element)node).getAttribute(FLUSH_AFTER);

if (param != null && !"".equals(param)) {

try {

flushAfter = Integer.parseInt(param);

} catch (NumberFormatException e) {

LOG.info("Invalid value for '" + FLUSH_AFTER + "'", e);

}

}

}

public int getFlushAfter() {

return flushAfter;

}

}

This retains the configuration attribute and provides a getter for it.

This configuration object is saved in a Map with the Index ID

and will be available as shown in the next method:

public void setDocument(DocumentImpl document) {

isDocumentGMLAware = false;

documentDeleted= false;

if (document != null) {

IndexSpec idxConf = document.getCollection().getIndexConfiguration(document.getBroker());

if (idxConf != null) {

Map collectionConfig = (Map) idxConf.getCustomIndexSpec(AbstractGMLJDBCIndex.ID);

if (collectionConfig != null) {

isDocumentGMLAware = true;

if (collectionConfig.get(AbstractGMLJDBCIndex.ID) != null)

flushAfter = ((GMLIndexConfig)collectionConfig.

get(AbstractGMLJDBCIndex.ID)).getFlushAfter();

}

}

}

if (isDocumentGMLAware) {

currentDoc = document;

} else {

currentDoc = null;

currentMode = StreamListener.UNKNOWN;

}

}

The objective is to determine if document should be indexed by

the spatial Index.

For this, we look up its collection configuration and try to find a "custom" index

specification for our Index. If one is found, our

document will be processed by the IndexWorker.

We also take advantage of this process to set one of our members. If

document doesn't interest our IndexWorker, we

reset some members to avoid having an inconsistent sticky state.

The next methods don't require any particular comment:

public void setDocument(DocumentImpl doc, int mode) {

setDocument(doc);

setMode(mode);

}

public DocumentImpl getDocument() {

return currentDoc;

}

public int getMode() {

return currentMode;

}

The next method is somewhat tricky:

public StreamListener getListener() {

if (currentDoc == null || currentMode == StreamListener.REMOVE_ALL_NODES)

return null;

return gmlStreamListener;

}

It doesn't return any StreamListener in the StreamListener.REMOVE_ALL_NODES mode. It would be totally unnecessary to listen to every node when a JDBC database is able to delete all the document's nodes in one single statement.

The next method is a placeholder that needs more thinking. How should geometric information be highlighted smartly?

public MatchListener getMatchListener(NodeProxy proxy) {

return null;

}

The next method computes the reindexing root. We go bottom-up from the node to be modified up to the top-most element in the GML namespace. Indeed, GML allows "nested" or "multi" geometries. If a single part of such a Geometry is modified, the whole geometry has to be recomputed.

public StoredNode getReindexRoot(StoredNode node, NodePath path, boolean includeSelf) {

if (!isDocumentGMLAware)

//Not concerned

return null;

StoredNode topMost = node;

StoredNode currentNode = node;

for (int i = path.length() ; i > 0; i--) {

currentNode = (StoredNode)currentNode.getParentNode();

if (GML_NS.equals(currentNode.getNamespaceURI()))

topMost = currentNode;

}

return topMost;

}
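The bottom-up walk can be tried outside eXist with the JDK's DOM API. The sketch below is an illustration under that assumption, not the eXist code: the class TopMostGmlAncestor is hypothetical, it works on org.w3c.dom.Node rather than StoredNode, and it also considers the starting node itself, whereas the listing above starts at its parent.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Node;
import org.xml.sax.InputSource;

public class TopMostGmlAncestor {

    public static final String GML_NS = "http://www.opengis.net/gml";

    /**
     * Returns the top-most ancestor-or-self of node that is in the GML
     * namespace, or node itself when no such element exists.
     */
    public static Node find(Node node) {
        Node topMost = node;
        // Walk up to the document node; its namespace URI is null
        for (Node current = node; current != null; current = current.getParentNode()) {
            if (GML_NS.equals(current.getNamespaceURI())) {
                topMost = current;
            }
        }
        return topMost;
    }

    /** Parses an XML string with namespace support enabled. */
    public static Document parse(String xml) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true);
        return factory.newDocumentBuilder().parse(new InputSource(new StringReader(xml)));
    }
}
```

For a gml:Polygon nested in a gml:polygonMember of a gml:MultiPolygon, find() returns the gml:MultiPolygon element, which is the geometry that would have to be recomputed.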

The next method delegates the write operations:

public void flush() {

if (!isDocumentGMLAware)

//Not concerned

return;

//Is the job already done ?

if (currentMode == StreamListener.REMOVE_ALL_NODES && documentDeleted)

return;

Connection conn = null;

try {

conn = acquireConnection();

conn.setAutoCommit(false);

switch (currentMode) {

case StreamListener.STORE :

saveDocumentNodes(conn);

break;

case StreamListener.REMOVE_SOME_NODES :

dropDocumentNode(conn);

break;

case StreamListener.REMOVE_ALL_NODES:

removeDocument(conn);

documentDeleted = true;

break;

}

conn.commit();

} catch (SQLException e) {

LOG.error("Document: " + currentDoc + " NodeID: " + currentNodeId, e);

try {

conn.rollback();

} catch (SQLException ee) {

LOG.error(ee);

}

} finally {

try {

if (conn != null) {

conn.setAutoCommit(true);

releaseConnection(conn);

}

} catch (SQLException e) {

LOG.error(e);

}

}

}

Even though its code looks heavy, this is a good way to acquire (then release) a Connection regardless of how it is provided by the IndexWorker (see above for these aspects, concurrency in particular). It then delegates the write operations to dedicated methods, which do not have to care about the Connection. Write operations are wrapped in a transaction. Should an exception occur, it is logged and swallowed: eXist doesn't like exceptions when it flushes its data.
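The transaction handling can be isolated into a small helper, sketched here with standard JDBC calls only. TransactionalWrite and SqlWork are hypothetical names introduced for the illustration; acquiring and releasing the connection is left to the caller, and errors are written to System.err instead of a logger to keep the sketch self-contained.

```java
import java.sql.Connection;
import java.sql.SQLException;

public class TransactionalWrite {

    /** A unit of work that writes through the given connection. */
    public interface SqlWork {
        void run(Connection conn) throws SQLException;
    }

    /**
     * Runs work inside a transaction. Returns true on commit and false when
     * the work failed and was rolled back. Failures are reported, not rethrown.
     */
    public static boolean execute(Connection conn, SqlWork work) {
        try {
            conn.setAutoCommit(false);
            work.run(conn);
            conn.commit();
            return true;
        } catch (SQLException e) {
            System.err.println("Write failed, rolling back: " + e.getMessage());
            try {
                conn.rollback();
            } catch (SQLException ee) {
                System.err.println("Rollback failed: " + ee.getMessage());
            }
            return false;
        } finally {
            try {
                // Restore the default mode for the next user of the connection
                conn.setAutoCommit(true);
            } catch (SQLException e) {
                System.err.println("Could not restore auto-commit: " + e.getMessage());
            }
        }
    }
}
```

Because the helper receives an already acquired Connection, it never has to deal with a null reference in the rollback path.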

The next method delegates node storage:

private void saveDocumentNodes(Connection conn) throws SQLException {

if (geometries.size() == 0)

return;

try {

for (Iterator iterator = geometries.entrySet().iterator(); iterator.hasNext();) {

Map.Entry entry = (Map.Entry) iterator.next();

NodeId nodeId = (NodeId)entry.getKey();

SRSGeometry srsGeometry = (SRSGeometry)entry.getValue();

try {

saveGeometryNode(srsGeometry.getGeometry(), srsGeometry.getSRSName(),

currentDoc, nodeId, conn);

} finally {

//Help the garbage collector

srsGeometry = null;

}

}

} finally {

geometries.clear();

}

}

It will call saveGeometryNode() (see below) passing a container

inner class that will not be described given its simplicity.

The next two methods are built with the same design. The first one destroys the index entry for the currently processed node and the second one removes the index entries for the whole document.

private void dropDocumentNode(Connection conn)

throws SQLException {

if (currentNodeId == null)

return;

try {

boolean removed = removeDocumentNode(currentDoc, currentNodeId, conn);

if (!removed)

LOG.error("No data dropped for node " + currentNodeId.toString() + " from GML index");

else {

if (LOG.isDebugEnabled())

LOG.debug("Dropped data for node " + currentNodeId.toString() + " from GML index");

}

} finally {

currentNodeId = null;

}

}

private void removeDocument(Connection conn)

throws SQLException {

if (LOG.isDebugEnabled())

LOG.debug("Dropping GML index for document " + currentDoc.getURI());

int nodeCount = removeDocument(currentDoc, conn);

if (LOG.isDebugEnabled())

LOG.debug("Dropped " + nodeCount + " nodes from GML index");

}

The next method is a mix of the designs described above. It also makes a preliminary check:

public void removeCollection(Collection collection, DBBroker broker) {

boolean isCollectionGMLAware = false;

IndexSpec idxConf = collection.getIndexConfiguration(broker);

if (idxConf != null) {

Map collectionConfig = (Map) idxConf.getCustomIndexSpec(AbstractGMLJDBCIndex.ID);

if (collectionConfig != null) {

isCollectionGMLAware = (collectionConfig != null);

}

}

if (!isCollectionGMLAware)

return;

Connection conn = null;

try {

conn = acquireConnection();

if (LOG.isDebugEnabled())

LOG.debug("Dropping GML index for collection " + collection.getURI());

int nodeCount = removeCollection(collection, conn);

if (LOG.isDebugEnabled())

LOG.debug("Dropped " + nodeCount + " nodes from GML index");

} catch (SQLException e) {

LOG.error(e);

} finally {

try {

if (conn != null)

releaseConnection(conn);

} catch (SQLException e) {

LOG.error(e);

}

}

}

Indeed, we have to check if the collection is indexable by the

Index before trying to delete its index entries.

The next methods are built on the same design (Connection and exception management) and will thus not be described.

public NodeSet search(DBBroker broker, NodeSet contextSet, Geometry EPSG4326_geometry, int spatialOp) {

...

}

public Geometry getGeometryForNode(DBBroker broker, NodeProxy p, boolean getEPSG4326) throws SpatialIndexException {

...

}

protected Geometry[] getGeometriesForNodes(DBBroker broker, NodeSet contextSet, boolean getEPSG4326) throws SpatialIndexException {

...

}

public AtomicValue getGeometricPropertyForNode(DBBroker broker, NodeProxy p, String propertyName)

throws SpatialIndexException {

...

}

public ValueSequence getGeometricPropertyForNodes(DBBroker broker, NodeSet contextSet, String propertyName)

throws SpatialIndexException {

...

}

All these methods delegate to the following abstract methods that will have to be implemented by the DB-dependent concrete classes:

protected abstract boolean saveGeometryNode(Geometry geometry, String srsName, DocumentImpl doc, NodeId nodeId, Connection conn)

throws SQLException;

protected abstract boolean removeDocumentNode(DocumentImpl doc, NodeId nodeID, Connection conn)

throws SQLException;

protected abstract int removeDocument(DocumentImpl doc, Connection conn)

throws SQLException;

protected abstract int removeCollection(Collection collection, Connection conn)

throws SQLException;

protected abstract Connection acquireConnection() throws SQLException;

protected abstract void releaseConnection(Connection conn) throws SQLException;

protected abstract NodeSet search(DBBroker broker, NodeSet contextSet, Geometry EPSG4326_geometry, int spatialOp, Connection conn)

throws SQLException;

protected abstract Map getGeometriesForDocument(DocumentImpl doc, Connection conn)

throws SQLException;

protected abstract AtomicValue getGeometricPropertyForNode(DBBroker broker, NodeProxy p, Connection conn,

String propertyName) throws SQLException, XPathException;

protected abstract ValueSequence getGeometricPropertyForNodes(DBBroker broker, NodeSet contextSet, Connection conn, String propertyName)

throws SQLException, XPathException;

protected abstract Geometry getGeometryForNode(DBBroker broker, NodeProxy p, boolean getEPSG4326, Connection conn)

throws SQLException;

protected abstract Geometry[] getGeometriesForNodes(DBBroker broker, NodeSet contextSet, boolean getEPSG4326, Connection conn)

throws SQLException;

protected abstract boolean checkIndex(DBBroker broker, Connection conn)

throws SQLException, SpatialIndexException;

Let's have a look, however, at this method that doesn't need a DB-dependent implementation:

public Occurrences[] scanIndex(DocumentSet docs) {

Map occurences = new TreeMap();

Connection conn = null;

try {

conn = acquireConnection();

//Collect the (normalized) geometries for each document

for (Iterator iDoc = docs.iterator(); iDoc.hasNext();) {

DocumentImpl doc = (DocumentImpl)iDoc.next();

//TODO : check if document is GML-aware ?

//Aggregate the occurences between different documents

for (Iterator iGeom = getGeometriesForDocument(doc, conn).entrySet().iterator(); iGeom.hasNext();) {

Map.Entry entry = (Map.Entry) iGeom.next();

Geometry key = (Geometry)entry.getKey();

//Do we already have an occurence for this geometry ?

Occurrences oc = (Occurrences)occurences.get(key);

if (oc != null) {

//Yes : increment occurence count

oc.addOccurrences(1);

//...and reference the document

oc.addDocument(doc);

} else {

//No : create a new occurence with EPSG4326_WKT as "term"

oc = new Occurrences((String)entry.getValue());

//... with a count set to 1

oc.addOccurrences(1);

//... and reference the document

oc.addDocument(doc);

occurences.put(key, oc);

}

}

}

} catch (SQLException e) {

LOG.error(e);

return null;

} finally {

try {

if (conn != null)

releaseConnection(conn);

} catch (SQLException e) {

LOG.error(e);

return null;

}

}

Occurrences[] result = new Occurrences[occurences.size()];

occurences.values().toArray(result);

return result;

}

Same design (Connection and exception management, delegation mechanism). We will probably add more like this in the future.
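The aggregation performed by scanIndex() boils down to counting how often each key is seen. As a simplified sketch that leaves out eXist's Occurrences class and the document references, the same counting can be written with a sorted map; the class OccurrenceCounter and the use of WKT strings as keys are assumptions made for the illustration.

```java
import java.util.Map;
import java.util.TreeMap;

public class OccurrenceCounter {

    /** Counts how many times each term appears, keeping terms in sorted order. */
    public static Map<String, Integer> count(Iterable<String> terms) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String term : terms) {
            // First sighting stores 1, later sightings add 1 to the stored count
            counts.merge(term, 1, Integer::sum);
        }
        return counts;
    }
}
```

Counting "POINT (1 2)", "POINT (3 4)" and "POINT (1 2)" gives 2 for the first term and 1 for the second.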

The following methods are utility methods to stream Geometry

instances to XML and vice-versa.

public Geometry streamNodeToGeometry(XQueryContext context, NodeValue node)

throws SpatialIndexException {

try {

context.pushDocumentContext();

try {

node.toSAX(context.getBroker(), geometryDocument, null);

} finally {

context.popDocumentContext();

}

} catch (SAXException e) {

throw new SpatialIndexException(e);

}

return streamedGeometry;

}

public Element streamGeometryToElement(Geometry geometry, String srsName, Receiver receiver)

throws SpatialIndexException {

String gmlString = null;

try {

gmlString = gmlTransformer.transform(geometry);

} catch (TransformerException e) {

throw new SpatialIndexException(e);

}

try {

SAXParserFactory factory = SAXParserFactory.newInstance();

factory.setNamespaceAware(true);

InputSource src = new InputSource(new StringReader(gmlString));

SAXParser parser = factory.newSAXParser();

XMLReader reader = parser.getXMLReader();

reader.setContentHandler((ContentHandler)receiver);

reader.parse(src);

Document doc = receiver.getDocument();

return doc.getDocumentElement();

} catch (ParserConfigurationException e) {

throw new SpatialIndexException(e);

} catch (SAXException e) {

throw new SpatialIndexException(e);

} catch (IOException e) {

throw new SpatialIndexException(e);

}

}

The first one uses an org.geotools.gml.GMLFilterDocument (see below) and the second one uses an org.geotools.gml.producer.GeometryTransformer, which needs some polishing because, although it is called a transformer, it doesn't cope easily with a Handler and returns a... String! See GEOT-1315.

The last method is also a utility method:

public Geometry transformGeometry(Geometry geometry, String sourceCRS, String targetCRS)

throws SpatialIndexException {

if ("osgb:BNG".equalsIgnoreCase(sourceCRS.trim()))

sourceCRS = "EPSG:27700";

if ("osgb:BNG".equalsIgnoreCase(targetCRS.trim()))

targetCRS = "EPSG:27700";

MathTransform transform = (MathTransform)transformations.get(sourceCRS + "_" + targetCRS);

if (transform == null) {

try {

try {

transform = CRS.findMathTransform(CRS.decode(sourceCRS), CRS.decode(targetCRS), useLenientMode);

} catch (OperationNotFoundException e) {

LOG.info(e);

LOG.info("Switching to lenient mode... beware of precision loss !");

useLenientMode = true;

transform = CRS.findMathTransform(CRS.decode(sourceCRS), CRS.decode(targetCRS), useLenientMode);

}

transformations.put(sourceCRS + "_" + targetCRS, transform);

LOG.debug("Instantiated transformation from '" + sourceCRS + "' to '" + targetCRS + "'");

} catch (NoSuchAuthorityCodeException e) {

LOG.error(e);

} catch (FactoryException e) {

LOG.error(e);

}

}

if (transform == null) {

throw new SpatialIndexException("Unable to get a transformation from '" + sourceCRS + "' to '" + targetCRS +"'");

}

coordinateTransformer.setMathTransform(transform);

try {

return coordinateTransformer.transform(geometry);

} catch (TransformException e) {

throw new SpatialIndexException(e);

}

}

It implements a workaround for our test file's SRS, which isn't yet known by the Geotools libraries (see GEOT-1307), then it tries to get the transformation from our cache. If the cache doesn't hold one, it tries to find one in the libraries that are in the CLASSPATH. Should those libraries lack the Bursa-Wolf parameters, it makes another attempt in lenient mode, which induces a loss of accuracy. Finally, it transforms the Geometry from its sourceCRS to the required targetCRS.
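The caching and fallback logic can be sketched independently of Geotools. In the following illustration, TransformCache and its Finder interface are hypothetical: Finder stands in for the CRS lookup, and any exception thrown by the strict lookup triggers the switch to lenient mode, whereas the listing above reacts only to OperationNotFoundException.

```java
import java.util.HashMap;
import java.util.Map;

public class TransformCache<T> {

    /** Hypothetical lookup that may fail when lenient is false. */
    public interface Finder<T> {
        T find(String sourceCRS, String targetCRS, boolean lenient) throws Exception;
    }

    private final Map<String, T> cache = new HashMap<>();
    private final Finder<T> finder;
    private boolean useLenientMode = false;

    public TransformCache(Finder<T> finder) {
        this.finder = finder;
    }

    /** Returns the cached transformation, looking it up on first use. */
    public T get(String sourceCRS, String targetCRS) throws Exception {
        String key = sourceCRS + "_" + targetCRS;
        T transform = cache.get(key);
        if (transform == null) {
            try {
                transform = finder.find(sourceCRS, targetCRS, useLenientMode);
            } catch (Exception e) {
                if (useLenientMode) {
                    throw e;
                }
                // Strict lookup failed: stay lenient from now on, accepting precision loss
                useLenientMode = true;
                transform = finder.find(sourceCRS, targetCRS, true);
            }
            cache.put(key, transform);
        }
        return transform;
    }

    public boolean isLenient() {
        return useLenientMode;
    }
}
```

Once lenient mode has been switched on it stays on for all later lookups, which mirrors the behaviour of the useLenientMode member.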

Now, let's study how the abstract methods are implemented by the HSQLDB-dependent class:

package org.exist.indexing.spatial;

public class GMLHSQLIndexWorker extends AbstractGMLJDBCIndexWorker {

private static final Logger LOG = Logger.getLogger(GMLHSQLIndexWorker.class);

public GMLHSQLIndexWorker(GMLHSQLIndex index, DBBroker broker) {

super(index, broker);

}

}

The only noticeable point is that we indeed extend our org.exist.indexing.spatial.AbstractGMLJDBCIndexWorker.

Now, this method does something more interesting: it stores the Geometry associated with a node:

protected boolean saveGeometryNode(Geometry geometry, String srsName, DocumentImpl doc, NodeId nodeId, Connection conn)

throws SQLException {

PreparedStatement ps = conn.prepareStatement("INSERT INTO " + GMLHSQLIndex.TABLE_NAME + "(" +

/*1*/ "DOCUMENT_URI, " +

/*2*/ "NODE_ID_UNITS, " +

/*3*/ "NODE_ID, " +

/*4*/ "GEOMETRY_TYPE, " +

/*5*/ "SRS_NAME, " +

/*6*/ "WKT, " +

/*7*/ "WKB, " +

/*8*/ "MINX, " +

/*9*/ "MAXX, " +

/*10*/ "MINY, " +

/*11*/ "MAXY, " +

/*12*/ "CENTROID_X, " +

/*13*/ "CENTROID_Y, " +

/*14*/ "AREA, " +

//Boundary ?

/*15*/ "EPSG4326_WKT, " +

/*16*/ "EPSG4326_WKB, " +

/*17*/ "EPSG4326_MINX, " +

/*18*/ "EPSG4326_MAXX, " +

/*19*/ "EPSG4326_MINY, " +

/*20*/ "EPSG4326_MAXY, " +

/*21*/ "EPSG4326_CENTROID_X, " +

/*22*/ "EPSG4326_CENTROID_Y, " +

/*23*/ "EPSG4326_AREA," +

//Boundary ?

/*24*/ "IS_CLOSED, " +

/*25*/ "IS_SIMPLE, " +

/*26*/ "IS_VALID" +

") VALUES (" +

"?, ?, ?, ?, ?, " +

"?, ?, ?, ?, ?, " +

"?, ?, ?, ?, ?, " +

"?, ?, ?, ?, ?, " +

"?, ?, ?, ?, ?, " +

"?"

+ ")"

);

try {

Geometry EPSG4326_geometry = null;

try {

EPSG4326_geometry = transformGeometry(geometry, srsName, "EPSG:4326");

} catch (SpatialIndexException e) {

//Transforms the exception into an SQLException.

SQLException ee = new SQLException(e.getMessage());

ee.initCause(e);

throw ee;

}

/*DOCUMENT_URI*/ ps.setString(1, doc.getURI().toString());

/*NODE_ID_UNITS*/ ps.setInt(2, nodeId.units());

byte[] bytes = new byte[nodeId.size()];

nodeId.serialize(bytes, 0);

/*NODE_ID*/ ps.setBytes(3, bytes);

/*GEOMETRY_TYPE*/ ps.setString(4, geometry.getGeometryType());

/*SRS_NAME*/ ps.setString(5, srsName);

/*WKT*/ ps.setString(6, wktWriter.write(geometry));

/*WKB*/ ps.setBytes(7, wkbWriter.write(geometry));

/*MINX*/ ps.setDouble(8, geometry.getEnvelopeInternal().getMinX());

/*MAXX*/ ps.setDouble(9, geometry.getEnvelopeInternal().getMaxX());

/*MINY*/ ps.setDouble(10, geometry.getEnvelopeInternal().getMinY());

/*MAXY*/ ps.setDouble(11, geometry.getEnvelopeInternal().getMaxY());

/*CENTROID_X*/ ps.setDouble(12, geometry.getCentroid().getCoordinate().x);

/*CENTROID_Y*/ ps.setDouble(13, geometry.getCentroid().getCoordinate().y);

/*AREA*/ ps.setDouble(14, geometry.getArea());

/*EPSG4326_WKT*/ ps.setString(15, wktWriter.write(EPSG4326_geometry));

/*EPSG4326_WKB*/ ps.setBytes(16, wkbWriter.write(EPSG4326_geometry));

/*EPSG4326_MINX*/ ps.setDouble(17, EPSG4326_geometry.getEnvelopeInternal().getMinX());

/*EPSG4326_MAXX*/ ps.setDouble(18, EPSG4326_geometry.getEnvelopeInternal().getMaxX());

/*EPSG4326_MINY*/ ps.setDouble(19, EPSG4326_geometry.getEnvelopeInternal().getMinY());

/*EPSG4326_MAXY*/ ps.setDouble(20, EPSG4326_geometry.getEnvelopeInternal().getMaxY());

/*EPSG4326_CENTROID_X*/ ps.setDouble(21, EPSG4326_geometry.getCentroid().getCoordinate().x);

/*EPSG4326_CENTROID_Y*/ ps.setDouble(22, EPSG4326_geometry.getCentroid().getCoordinate().y);

//EPSG4326_geometry.getRepresentativePoint()

/*EPSG4326_AREA*/ ps.setDouble(23, EPSG4326_geometry.getArea());

//For empty Curves, isClosed is defined to have the value false.

/*IS_CLOSED*/ ps.setBoolean(24, !geometry.isEmpty());

/*IS_SIMPLE*/ ps.setBoolean(25, geometry.isSimple());

//Should always be true (the GML SAX parser makes a too severe check)

/*IS_VALID*/ ps.setBoolean(26, geometry.isValid());

return (ps.executeUpdate() == 1);

} finally {

if (ps != null)

ps.close();

//Let's help the garbage collector...

geometry = null;

}

}

The generated SQL statement should be straightforward. We make heavy use of the methods provided by com.vividsolutions.jts.geom.Geometry, both on the "native" Geometry and on its EPSG:4326 transformation. We could probably store other properties here (e.g. the geometry's boundary). Other IndexWorkers, especially those accessing a spatially-enabled DBMS, might prefer to store fewer properties if they can be computed cheaply on demand.
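To make the idea of a stored, derived property concrete, here is a minimal plain-Java sketch of what the MINX, MAXX, MINY and MAXY columns hold. It is not the JTS implementation: the class name EnvelopeSketch and its method are hypothetical stand-ins for Geometry.getEnvelopeInternal(), and the ring is assumed to be a simple array of {x, y} pairs.

```java
// Hypothetical stand-in for JTS's Geometry.getEnvelopeInternal(); illustration only.
final class EnvelopeSketch {

    /** Returns {minX, maxX, minY, maxY} for a ring given as {x, y} pairs. */
    static double[] envelope(double[][] ring) {
        if (ring.length == 0) {
            throw new IllegalArgumentException("empty ring");
        }
        double minX = Double.POSITIVE_INFINITY;
        double maxX = Double.NEGATIVE_INFINITY;
        double minY = Double.POSITIVE_INFINITY;
        double maxY = Double.NEGATIVE_INFINITY;
        // A single pass over the coordinates is enough to find the bounding box.
        for (double[] p : ring) {
            minX = Math.min(minX, p[0]);
            maxX = Math.max(maxX, p[0]);
            minY = Math.min(minY, p[1]);
            maxY = Math.max(maxY, p[1]);
        }
        return new double[] {minX, maxX, minY, maxY};
    }
}
```

A bounding box like this is cheap to recompute, but storing it lets the database filter candidates with plain numeric comparisons before any geometry is decoded, which is presumably why it is kept in the table.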

The next method is even easier to understand:

protected boolean removeDocumentNode(DocumentImpl doc, NodeId nodeId, Connection conn)

throws SQLException {

PreparedStatement ps = conn.prepareStatement(

"DELETE FROM " + GMLHSQLIndex.TABLE_NAME +

" WHERE DOCUMENT_URI = ? AND NODE_ID_UNITS = ? AND NODE_ID = ?;"

);

ps.setString(1, doc.getURI().toString());

ps.setInt(2, nodeId.units());

byte[] bytes = new byte[nodeId.size()];

nodeId.serialize(bytes, 0);

ps.setBytes(3, bytes);

try {

return (ps.executeUpdate() == 1);

} finally {

if (ps != null)

ps.close();

}

}

This one even more so:

protected int removeDocument(DocumentImpl doc, Connection conn)

throws SQLException {

PreparedStatement ps = conn.prepareStatement(

"DELETE FROM " + GMLHSQLIndex.TABLE_NAME + " WHERE DOCUMENT_URI = ?;"

);

ps.setString(1, doc.getURI().toString());

try {

return ps.executeUpdate();

} finally {

if (ps != null)

ps.close();

}

}

This one, however, is a little trickier:

protected int removeCollection(Collection collection, Connection conn)

throws SQLException {

PreparedStatement ps = conn.prepareStatement(

"DELETE FROM " + GMLHSQLIndex.TABLE_NAME + " WHERE SUBSTRING(DOCUMENT_URI, 1, ?) = ?;"

);

ps.setInt(1, collection.getURI().toString().length());

ps.setString(2, collection.getURI().toString());

try {

return ps.executeUpdate();

} finally {

if (ps != null)

ps.close();

}

}

This is because it uses an SQL function, SUBSTRING, to select every document whose URI starts with the collection's URI.
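The filter can be sketched in plain Java. The class and method names below are hypothetical; prefixMatch mirrors the comparison made by the SQL statement above, while strictMatch is an assumed variant (not taken from the eXist sources) that also requires a path separator after the collection URI.

```java
// Hypothetical helper illustrating the prefix filter used by removeCollection.
final class CollectionPrefixSketch {

    /** Same test as SUBSTRING(DOCUMENT_URI, 1, n) = collectionUri, with n = collectionUri.length(). */
    static boolean prefixMatch(String documentUri, String collectionUri) {
        return documentUri.startsWith(collectionUri);
    }

    /** Assumed stricter variant: the prefix must end at a '/' boundary. */
    static boolean strictMatch(String documentUri, String collectionUri) {
        String prefix = collectionUri.endsWith("/") ? collectionUri : collectionUri + "/";
        return documentUri.startsWith(prefix);
    }
}
```

With a collection URI of /db/gml, the plain prefix test accepts /db/gml/doc.xml but also /db/gml2/doc.xml, since both begin with the same seven characters. Whether that matters depends on how collections are named; the stricter variant is one possible way to rule it out.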

The next two methods are straightforward, now that we have explained that Connections have to be requested from the Index to avoid concurrency problems on an embedded HSQLDB instance.

protected Connection acquireConnection()

throws SQLException {

return index.acquireConnection(this.broker);

}

protected void releaseConnection(Connection conn)

throws SQLException {

index.releaseConnection(this.broker);

}
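The acquire/release pattern can be illustrated with a small guard that hands a single shared resource to one owner at a time. This is only a sketch under assumptions: SingleOwnerGuard and its methods are hypothetical names, the resource stands in for the shared Connection, the owner stands in for the broker, and the actual Index implementation may be organized differently.

```java
// Hypothetical sketch: one shared resource, at most one owner at any time.
final class SingleOwnerGuard<R> {

    private final R resource;
    private Object owner; // null while the resource is free

    SingleOwnerGuard(R resource) {
        this.resource = resource;
    }

    /** Waits up to timeoutMillis for the resource; returns null if it stays busy. */
    synchronized R acquire(Object requester, long timeoutMillis) {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (owner != null && owner != requester) {
            long remaining = deadline - System.currentTimeMillis();
            if (remaining <= 0) {
                return null; // still owned by someone else
            }
            try {
                wait(remaining);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                return null;
            }
        }
        owner = requester;
        return resource;
    }

    /** Frees the resource and wakes up any waiting requester. */
    synchronized void release(Object requester) {
        if (owner != requester) {
            throw new IllegalStateException("requester does not own the resource");
        }
        owner = null;
        notifyAll();
    }
}
```

Keying ownership on the requester rather than on the resource matches the shape of the two methods above, where the worker passes its broker and the Connection argument of releaseConnection is not used.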

The next method is much more interesting. This is where the core of the spatial index is:

protected NodeSet search(DBBroker broker, NodeSet contextSet, Geometry EPSG4326_geometry, int spatialOp, Connection conn)

throws SQLException {

String extraSelection = null;

String bboxConstraint = null;

switch (spatialOp) {

//BBoxes are equal

case SpatialOperator.EQUALS:

bboxConstraint = "(EPSG4326_MINX = ? AND EPSG4326_MAXX = ?)" +

" AND (EPSG4326_MINY = ? AND EPSG4326_MAXY = ?)";

break;

//Nothing much we can do with the BBox at this stage

case SpatialOperator.DISJOINT:

//Retrieve the BBox though...

extraSelection = ", EPSG4326_MINX, EPSG4326_MAXX, EPSG4326_MINY, EPSG4326_MAXY";

break;

//BBoxes intersect each other

case SpatialOperator.INTERSECTS:

case SpatialOperator.TOUCHES:

case SpatialOperator.CROSSES:

case SpatialOperator.OVERLAPS:

bboxConstraint = "(EPSG4326_MAXX >= ? AND EPSG4326_MINX <= ?)" +

" AND (EPSG4326_MAXY >= ? AND EPSG4326_MINY <= ?)";

break;

//BBox is fully within

case SpatialOperator.WITHIN:

bboxConstraint = "(EPSG4326_MINX >= ? AND EPSG4326_MAXX <= ?)" +

" AND (EPSG4326_MINY >= ? AND EPSG4326_MAXY <= ?)";

break;

//BBox fully contains

case SpatialOperator.CONTAINS:

bboxConstraint = "(EPSG4326_MINX <= ? AND EPSG4326_MAXX >= ?)" +

" AND (EPSG4326_MINY <= ? AND EPSG4326_MAXY >= ?)";

break;

default:

throw new IllegalArgumentException("Unsupported spatial operator:" + spatialOp);

}

PreparedStatement ps = conn.prepareStatement(

"SELECT EPSG4326_WKB, DOCUMENT_URI, NODE_ID_UNITS, NODE_ID" + (extraSelection == null ? "" : extraSelection) +

" FROM " + GMLHSQLIndex.TABLE_NAME +

(bboxConstraint == null ? "" : " WHERE " + bboxConstraint) + ";"

);

if (bboxConstraint != null) {

ps.setDouble(1, EPSG4326_geometry.getEnvelopeInternal().getMinX());

ps.setDouble(2, EPSG4326_geometry.getEnvelopeInternal().getMaxX());

ps.setDouble(3, EPSG4326_geometry.getEnvelopeInternal().getMinY());

ps.setDouble(4, EPSG4326_geometry.getEnvelopeInternal().getMaxY());

}

ResultSet rs = null;

NodeSet result = null;

try {

int disjointPostFiltered = 0;

rs = ps.executeQuery();

result = new ExtArrayNodeSet(); //new ExtArrayNodeSet(docs.getLength(), 250)

while (rs.next()) {

DocumentImpl doc = null;

try {

doc = (DocumentImpl)broker.getXMLResource(XmldbURI.create(rs.getString("DOCUMENT_URI")));

} catch (PermissionDeniedException e) {

LOG.debug(e);

//Ignore since the broker has no right on the document

continue;

}

//contextSet == null should be used to scan the whole index

if (contextSet == null || contextSet.getDocumentSet().contains(doc.getDocId())) {

NodeId nodeId = new DLN(rs.getInt("NODE_ID_UNITS"), rs.getBytes("NODE_ID"), 0);

NodeProxy p = new NodeProxy((DocumentImpl)doc, nodeId);

if (contextSet == null || contextSet.get(p) != null) {

boolean geometryMatches = false;

if (spatialOp == SpatialOperator.DISJOINT) {

//No BBox intersection : obviously disjoint

if (rs.getDouble("EPSG4326_MAXX") < EPSG4326_geometry.getEnvelopeInternal().getMinX() ||

rs.getDouble("EPSG4326_MINX") > EPSG4326_geometry.getEnvelopeInternal().getMaxX() ||